Deleted member 15652

Guest

Hi Martin, and everybody here!

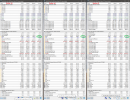

Here is a screenshot of my PC running the Cinebench R23 MT test. I've merged two sensor panel screenshots taken in different modes.

Can someone explain why the reported core VID values are

- all the same and close to the Vcore SVI2 value in normal mode (left panel)

- all different and unrealistically low in the other mode (right panel)?

Thanks!

_______________________________________________________________________

Ryzen 5 5600X, ASRock B550 Extreme4 (AGESA 1.1.0.0 Patch D, SMU 56.40)
