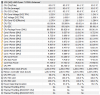

The PPT of 45 W under 100% load alone indicates something fishy is going on here.

I've updated to the latest BIOS available for the Taichi (UEFI 3.0).

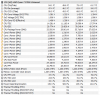

Fresh boot, nothing running except Wallpaper Engine

Cinebench R20 running

It seems to me the data is being reported more accurately at this point, which means the older BIOS was definitely doing something strange compared to the latest releases.

-- I'd also like to note that my memory was running at 2133 MHz instead of 3200 MHz during this test, since that was apparently reset AGAIN when I flashed the BIOS.

-- -- Enabled XMP for 3200 MHz RAM again; no change in values from the latest ones posted, so it seems to be good now.
