jhelmer25

Member

Hello all, I am turning to this community because I am at a total loss.

I recently built a new PC that randomly reboots and/or crashes fairly frequently. It reboots/crashes without warning (i.e. no BSOD, and (seemingly) no Windows Event Viewer logs that point to a problem outside of the "Critical Kernel-Power (41)" error as a result of the crash itself). There is no consistency to when it crashes. I went 5 days with no crash, and just had 2 crashes today.

These crashes do not appear to be related to system load. they will occur when I am gaming, but also when I am just browsing the web (i.e. watching YouTube). I cannot say 100% that I have had it crash at idle, or when there wasn't some form of video playing. This would be difficult to validate because I don't use the PC for other purposes very frequently, or for a long enough usage period to cause a crash.

Things I have tried:

- Disabling automatic restarts on system failure, in hopes to get a better read (still restarts w/o notification)

- Undoing/redoing all cable connections

- Getting all OS + driver updates (latest drivers direct from manufacturer)

- Updating to the lastest BIOS

- Replacing cables and reseating hardware

- Running a complete memtest86 (100% pass with 0 failures)

- Disabling all "performance" features in BIOS for vanilla boot configuration

- Replacing PSU with a brand new unit

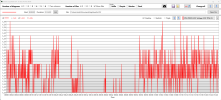

I have been actively monitoring the temperatures and load for the CPU, GPU, memory and storage. Everything is running within a reasonable range. I have attached a log file after the latest crash, which occurred after a gaming session (no games were running during the crash). If you go back ~30m you will see the system under load while playing Cyberpunk at max settings. The metrics are still all good, and since the crash occurred in even more optimal ranges, it is even more mysterious.

I used GenericLogViewer (v6.4) to look at the graphs from 1 hour prior to the crash (see screenshot), and nothing looks anomalistic - clicking through every sensor metric.

I am truly hoping that someone in the community can spot something I am not, or offer other suggestions. The only remaining things I can think to do are, replacing the MOBO, GPU and CPU, but I am truly hoping it doesn't come to that.

System specs:

- Motherboard: ASUS TUF Gaming B650-PLUS WiFi Socket AM5

- CPU: AMD Ryzen 7 7800X3D

- GPU: ZOTAC Gaming GeForce RTX 4070 Ti AMP Extreme AIRO

- RAM: CORSAIR VENGEANCE RGB DDR5 RAM 64GB (2x32GB) 6000MHz CL30

- Storage: Corsair MP700 2TB PCIe Gen5 x4 NVMe 2.0 M.2 SSD

- PSU: be quiet! Dark Power 13 1000W Quiet Performance Power Supply | 80 Plus Titanium Efficiency | ATX 3.0 | PCIe 5

- OS: Windows 11 Home Edition (although this issue also occurred with W10 before I upgraded).

PLEASE HELP! I am going crazy because the $$$ for this system is causing so much anxiety and I don't know what to do. Whoever solves this, I will buy you lunch ;-)

Thanks in advance.

I recently built a new PC that randomly reboots and/or crashes fairly frequently. It reboots/crashes without warning (i.e. no BSOD, and (seemingly) no Windows Event Viewer logs that point to a problem outside of the "Critical Kernel-Power (41)" error as a result of the crash itself). There is no consistency to when it crashes. I went 5 days with no crash, and just had 2 crashes today.

These crashes do not appear to be related to system load. they will occur when I am gaming, but also when I am just browsing the web (i.e. watching YouTube). I cannot say 100% that I have had it crash at idle, or when there wasn't some form of video playing. This would be difficult to validate because I don't use the PC for other purposes very frequently, or for a long enough usage period to cause a crash.

Things I have tried:

- Disabling automatic restarts on system failure, in hopes to get a better read (still restarts w/o notification)

- Undoing/redoing all cable connections

- Getting all OS + driver updates (latest drivers direct from manufacturer)

- Updating to the lastest BIOS

- Replacing cables and reseating hardware

- Running a complete memtest86 (100% pass with 0 failures)

- Disabling all "performance" features in BIOS for vanilla boot configuration

- Replacing PSU with a brand new unit

I have been actively monitoring the temperatures and load for the CPU, GPU, memory and storage. Everything is running within a reasonable range. I have attached a log file after the latest crash, which occurred after a gaming session (no games were running during the crash). If you go back ~30m you will see the system under load while playing Cyberpunk at max settings. The metrics are still all good, and since the crash occurred in even more optimal ranges, it is even more mysterious.

I used GenericLogViewer (v6.4) to look at the graphs from 1 hour prior to the crash (see screenshot), and nothing looks anomalistic - clicking through every sensor metric.

I am truly hoping that someone in the community can spot something I am not, or offer other suggestions. The only remaining things I can think to do are, replacing the MOBO, GPU and CPU, but I am truly hoping it doesn't come to that.

System specs:

- Motherboard: ASUS TUF Gaming B650-PLUS WiFi Socket AM5

- CPU: AMD Ryzen 7 7800X3D

- GPU: ZOTAC Gaming GeForce RTX 4070 Ti AMP Extreme AIRO

- RAM: CORSAIR VENGEANCE RGB DDR5 RAM 64GB (2x32GB) 6000MHz CL30

- Storage: Corsair MP700 2TB PCIe Gen5 x4 NVMe 2.0 M.2 SSD

- PSU: be quiet! Dark Power 13 1000W Quiet Performance Power Supply | 80 Plus Titanium Efficiency | ATX 3.0 | PCIe 5

- OS: Windows 11 Home Edition (although this issue also occurred with W10 before I upgraded).

PLEASE HELP! I am going crazy because the $$$ for this system is causing so much anxiety and I don't know what to do. Whoever solves this, I will buy you lunch ;-)

Thanks in advance.

Attachments

Last edited: